What is AWS Wickr?

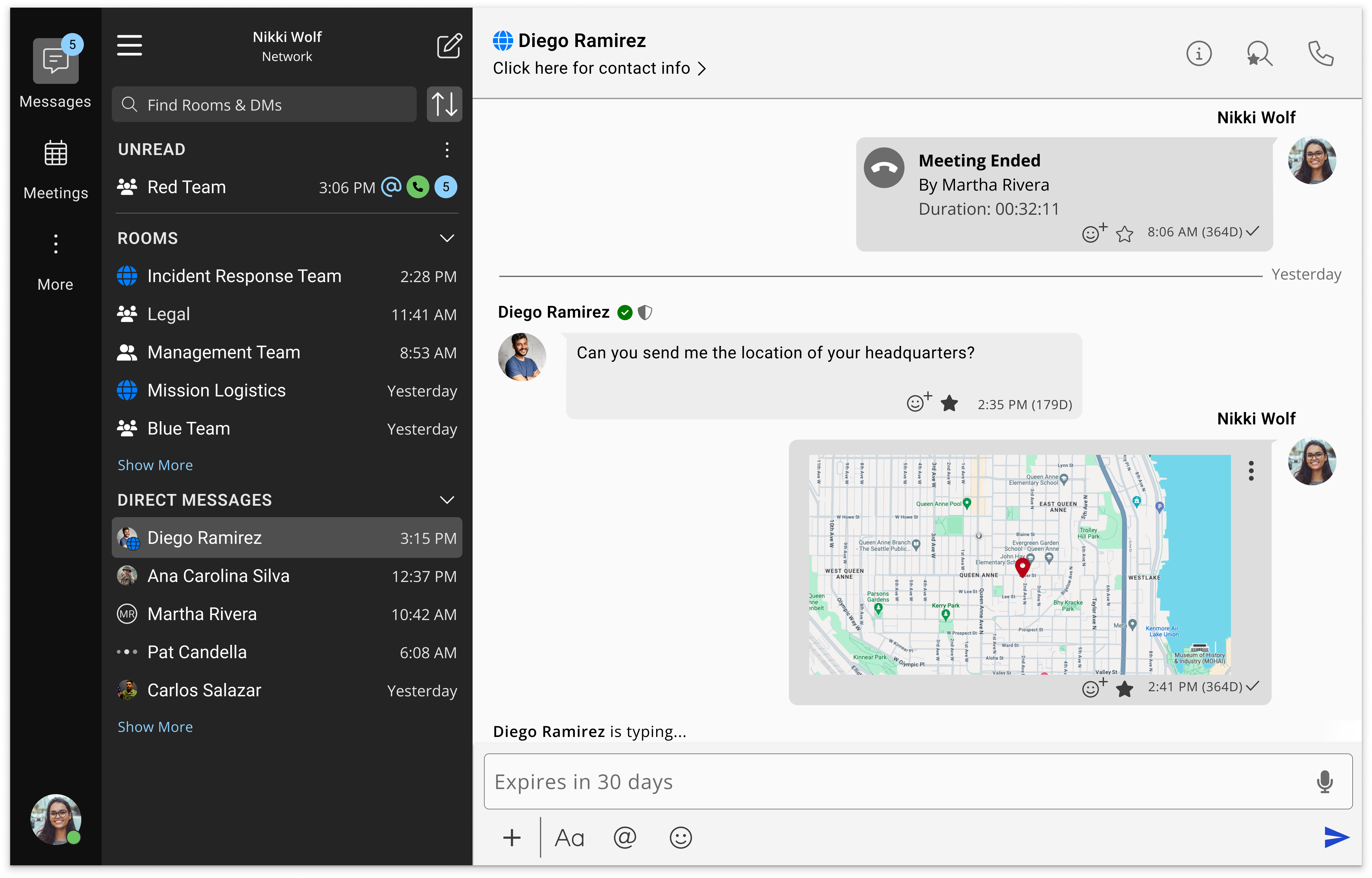

AWS Wickr is a secure collaboration application that transforms how organizations protect their communications. With specialized capabilities for executives, security teams, field operators, and anyone managing sensitive information, AWS Wickr helps every team member communicate confidently while maintaining data privacy. Leveraging AWS Wickr's end-to-end encryption and administrative controls, organizations can safeguard critical conversations, collaborate securely with partners, and ensure sensitive information stays protected.

Benefits

Keep security at the core of your communications

Our zero-trust architecture ensures complete message privacy with end-to-end encryption. No one—not even AWS—can access your messages, calls, or files. Only intended recipients have the keys to decrypt content, giving you true control over sensitive communications.

Enable secure collaboration

Connect and collaborate with external partners while maintaining security boundaries through federation capabilities. Share files up to 5 GB, conduct video calls with up to 100 participants, and communicate across organizational lines without compromising security.

Control your communication environment

Manage your AWS Wickr network through the AWS Management Console with comprehensive administrative controls. Create security groups, enforce policies, maintain audit trails, and configure data retention for both internal and external communications—all from a centralized dashboard.